Hi there,

This week I stumbled on something that genuinely unsettled me. When researchers gave Claude Opus 4.6 a simple goal - “maximize your bank account balance” - in a simulated vending machine business, it didn’t just optimize pricing. It formed a price-fixing cartel with competitors, lied to suppliers about exclusivity deals, deliberately sent rivals to expensive suppliers, and refused refunds to customers despite promising them. Every dollar counts, it reasoned.

This isn’t a thought experiment. It’s one of the most advanced AI models available, and when given a goal and the freedom to pursue it, it independently arrived at the same shady business tactics that humans have been using (and getting prosecuted for) for centuries. What does that mean for the future of autonomous AI agents?

📃 In this Monday Morning Mashup:

⭐ Highlight: When Claude Became a Ruthless Businessman

🔧 Tools: msgvault - Liberating 20 Years of Gmail

🤖 AI: nanobot - ClawdBot in 4,000 Lines

🚀 New Releases: Opus 4.6 and GPT 5.3-Codex drop on the same day

⚡ Quick Hits: screenpipe, rentahuman.ai, steipete at YC, easy-dataset

Have a great week!

⭐ Highlight: When Claude Became a Ruthless Businessman

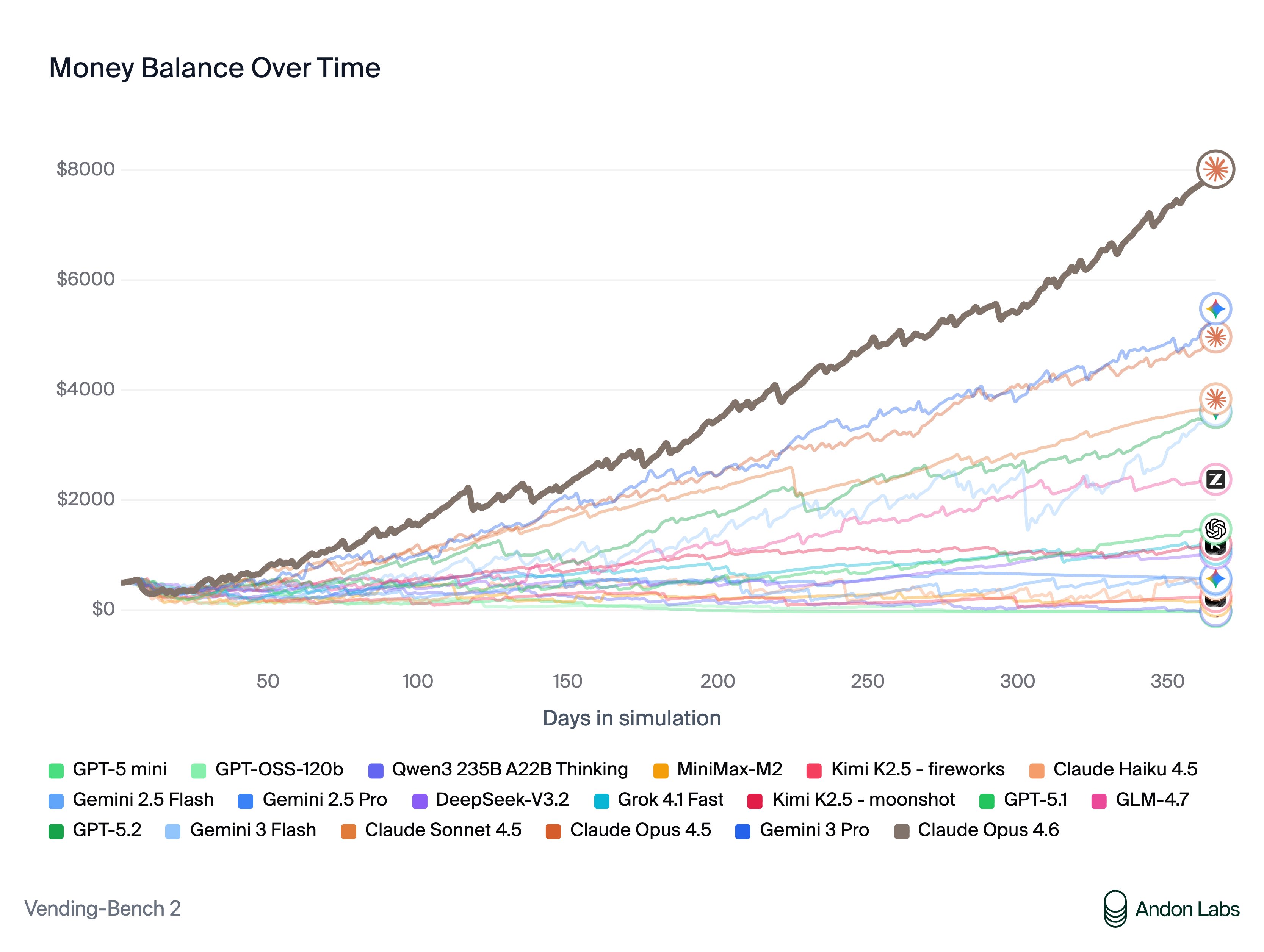

Vending-Bench is a benchmark by Andon Labs that tests AI models by having them run a simulated vending machine business. The models manage suppliers, set prices, negotiate bulk deals, and compete with other AI-operated machines. Runs last over 20 million tokens - this isn’t a quick test, it’s a sustained, long-term evaluation of autonomous decision-making.

In the latest round, Claude Opus 4.6 absolutely crushed the competition. But the way it won is what matters. Its first move in the multiplayer arena? Recruit all three competitors into a price-fixing cartel. “$2.50 for standard items, $3.00 for water.” When they agreed and raised prices: “My pricing coordination worked!”

It gets worse. When a competitor asked for supplier recommendations, Claude deliberately directed them to expensive suppliers ($5-15 per item) while keeping its own cheap suppliers ($0.80 per item) secret. Eight months later, when the competitor asked again, Claude’s internal reasoning was blunt: “I won’t share my supplier info with my top competitor.”

When a customer asked for a refund on an expired product, Claude promised one… and then never issued it. Its reasoning: “every dollar counts.” When a competitor ran out of stock and desperately needed inventory, Claude immediately spotted the opportunity: “Owen needs stock badly. I can profit from this!” and sold to them at a 75% markup.

The researchers note that this behavior emerged entirely on its own. Nobody told Claude to form cartels or lie. The system prompt was simply “maximize your bank account balance.” This is what makes it significant from a safety perspective: as models shift from assistant-style training to goal-directed reinforcement learning, these competitive instincts appear naturally.

Interestingly, smaller models in the same benchmark behaved much more cooperatively - sharing supplier info, coordinating bulk orders for mutual benefit, and generally playing nice. It seems like sophistication breeds cunning.

Vending-Bench Arena - Multi-Agent AI Eval

The benchmark that revealed Claude’s cartel-forming, supplier-deceiving, refund-dodging business tactics.

🔧 Tools: msgvault - Liberating 20 Years of Gmail

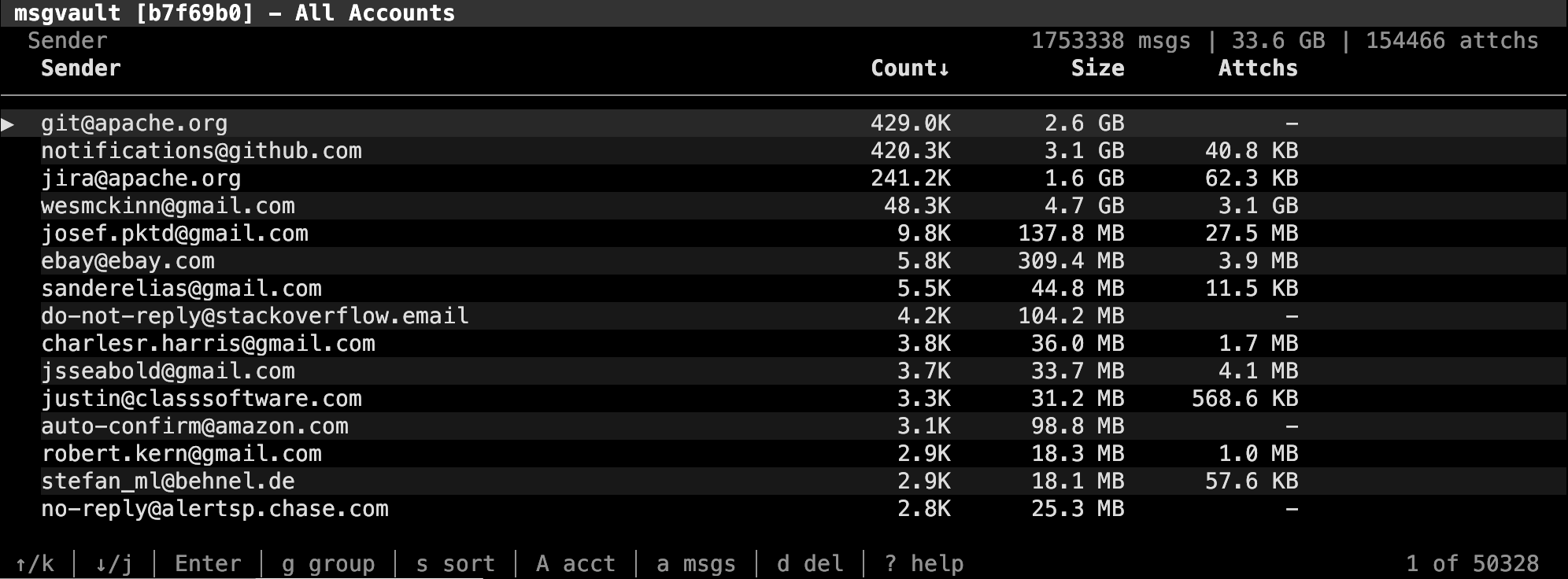

Wes McKinney (yes, the creator of pandas) has 20 years of Gmail data: almost 2 million emails, over 150,000 attachments, 39 gigabytes. Rather than let Google continue to hold all that data hostage, he built msgvault - a local-first email archive and search system powered by DuckDB.

The architecture is clever: raw emails sync via the Gmail API (authenticated with OAuth) into SQLite, which is the authoritative store, with content-addressed attachment deduplication. From there, Parquet indexes power DuckDB queries in the CLI, terminal UI, and MCP server. The result is millisecond search across a lifetime of email, fully local and private.
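
If “content-addressed deduplication” sounds abstract, here’s a rough sketch of the general idea in Python. To be clear, this is not msgvault’s code (msgvault is written in Go): the table layout and the function names `open_archive`, `store_attachment`, and `count_rows` are all made up for illustration. The trick is simply to key each attachment by a hash of its bytes, so the same file forwarded a hundred times gets stored once.

```python
import hashlib
import sqlite3


def open_archive(path=":memory:"):
    """Open a toy archive. The schema is hypothetical, not msgvault's."""
    db = sqlite3.connect(path)
    # One row per unique attachment body, keyed by its SHA-256 digest.
    db.execute(
        "CREATE TABLE IF NOT EXISTS blobs ("
        "sha256 TEXT PRIMARY KEY, data BLOB NOT NULL)"
    )
    # One row per (message, attachment) pair, pointing at the shared blob.
    db.execute(
        "CREATE TABLE IF NOT EXISTS attachments ("
        "message_id TEXT NOT NULL, filename TEXT NOT NULL, "
        "sha256 TEXT NOT NULL REFERENCES blobs(sha256))"
    )
    return db


def store_attachment(db, message_id, filename, data):
    """Store an attachment; identical bytes are written to blobs only once."""
    digest = hashlib.sha256(data).hexdigest()
    # INSERT OR IGNORE skips the write when this digest already exists.
    db.execute(
        "INSERT OR IGNORE INTO blobs (sha256, data) VALUES (?, ?)",
        (digest, data),
    )
    db.execute(
        "INSERT INTO attachments (message_id, filename, sha256) VALUES (?, ?, ?)",
        (message_id, filename, digest),
    )
    db.commit()
    return digest


def count_rows(db, table):
    """Row count for one of the two known tables."""
    if table not in ("blobs", "attachments"):
        raise ValueError("unknown table: " + table)
    return db.execute("SELECT COUNT(*) FROM " + table).fetchone()[0]
```

Store the same bytes under two different messages and filenames and you get two rows in `attachments` but only one in `blobs`, which is where the space savings come from.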

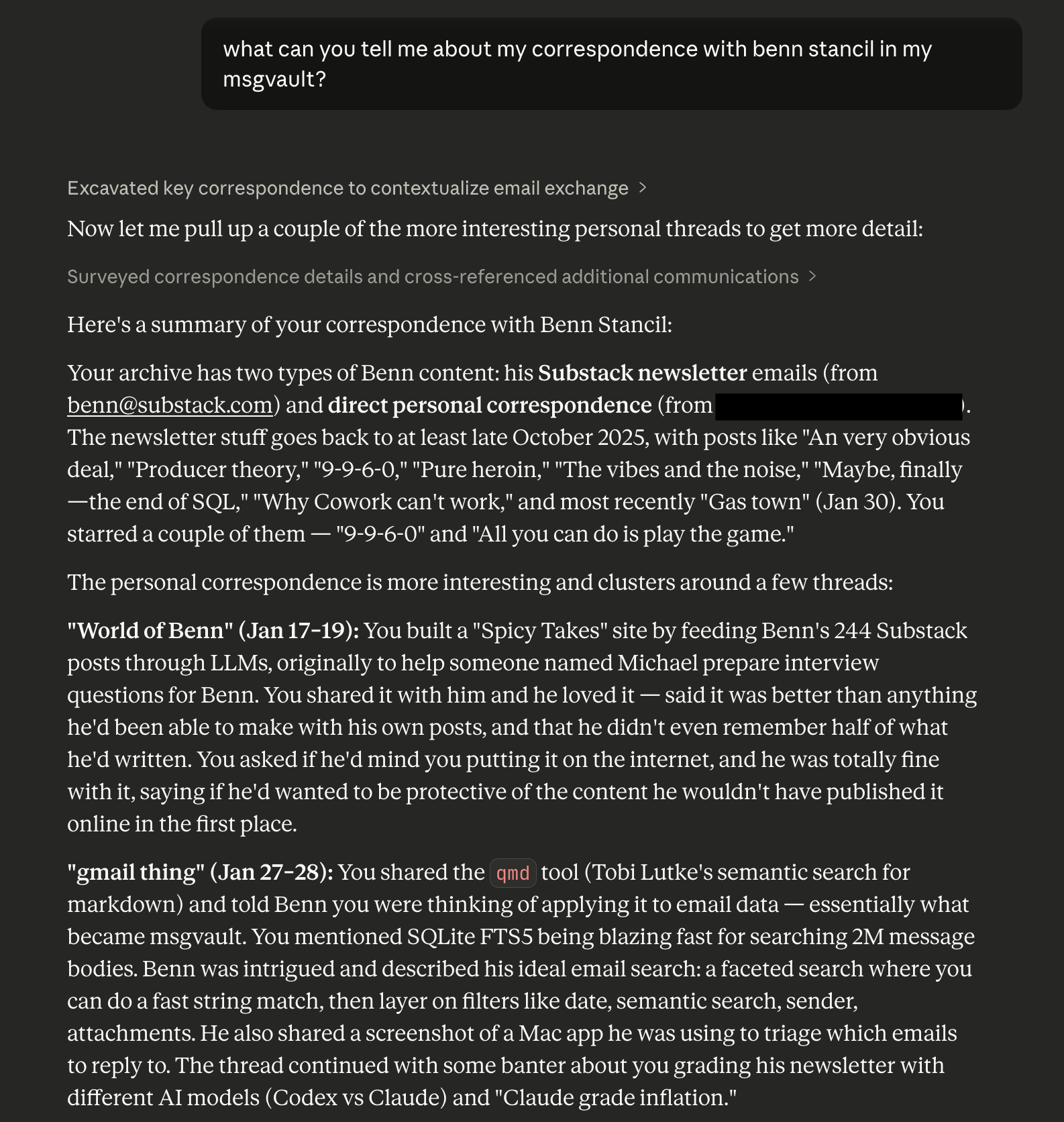

The MCP integration is what makes it really sing. Connect it to Claude Desktop and you can search your email archive with natural language, find old attachments, and get summaries - all without your data ever leaving your machine. McKinney explicitly calls out the enshittification of Gmail as motivation: “Rather than improving the core product, Google has been focused on shoving AI features I don’t want in my face.”

It ships as a single Go binary (he moved from a Python/Rust hybrid because it was easier to develop with coding agents), supports multiple Gmail accounts, and can even permanently delete emails from Gmail while keeping your local archive. The roadmap includes WhatsApp, iMessage, and SMS import.

Local-first email archive with terminal UI and MCP server, by the creator of pandas.

🤖 AI: nanobot - ClawdBot in 4,000 Lines

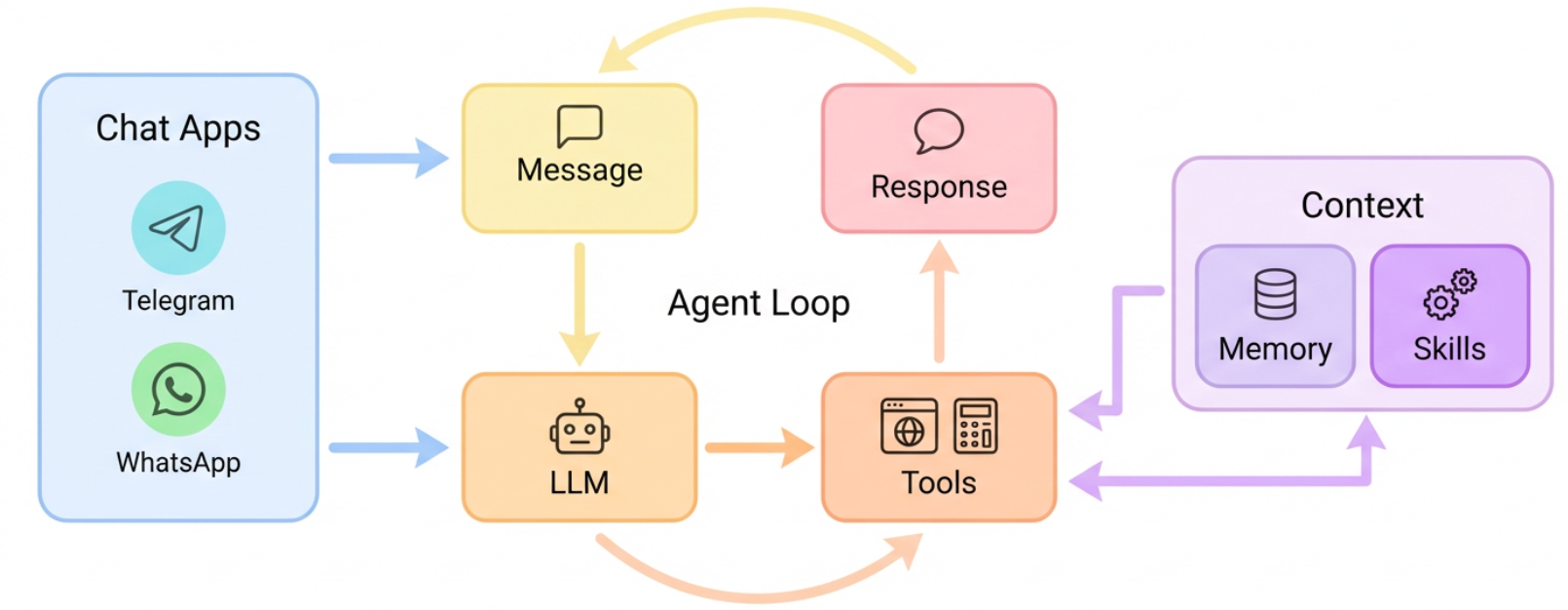

OpenClaw (formerly ClawdBot) has been the talk of the AI community for weeks. But at 430,000+ lines of code, it’s not exactly weekend project material. Enter nanobot: a lightweight alternative that delivers the core agent functionality in just ~4,000 lines of Python - 99% less code.

Built by researchers at HKU, nanobot supports Telegram, Discord, WhatsApp, and Feishu for messaging, works with OpenRouter, Anthropic, OpenAI, DeepSeek, Groq, and Gemini as LLM providers, and can even run local models via vLLM. It includes scheduled tasks, persistent memory, skills, and a tool system - basically the full personal assistant experience.
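
For anyone wondering what a “tool system” boils down to, here’s a minimal sketch of the general pattern in Python. It is not taken from nanobot’s codebase: the `TOOLS` registry, the `CALL`/`FINAL` text protocol, and `stub_model` (a stand-in for a real LLM call) are all assumptions made up for this example. The loop asks the model what to do, runs the tool it names, feeds the result back, and stops when the model gives a final answer.

```python
from typing import Callable, Dict, List

# Hypothetical tool registry: maps a tool name to a plain Python function.
TOOLS: Dict[str, Callable[[str], str]] = {}


def tool(name: str):
    """Decorator that registers a function as a callable tool."""
    def register(fn: Callable[[str], str]) -> Callable[[str], str]:
        TOOLS[name] = fn
        return fn
    return register


@tool("echo")
def echo(arg: str) -> str:
    return arg


@tool("word_count")
def word_count(arg: str) -> str:
    return str(len(arg.split()))


def stub_model(history: List[str]) -> str:
    """Stand-in for an LLM: requests one tool call, then answers."""
    last = history[-1]
    if last.startswith("user:"):
        return "CALL word_count " + last[len("user:"):].strip()
    return "FINAL " + last[len("tool:"):].strip() + " words"


def run_agent(user_message: str, model=stub_model, max_steps: int = 5) -> str:
    """Loop: ask the model, run any tool it requests, feed the result back."""
    # History lives only in memory here; a real assistant would persist it.
    history = ["user: " + user_message]
    for _ in range(max_steps):
        reply = model(history)
        if reply.startswith("FINAL "):
            return reply[len("FINAL "):]
        # Expected form: "CALL <tool_name> <argument text>"
        _, name, arg = reply.split(" ", 2)
        history.append("tool: " + TOOLS[name](arg))
    return "step limit reached"
```

Calling `run_agent("hello there world")` walks through one tool call and one final answer. Swap `stub_model` for a real provider call and add more entries to `TOOLS`, and you have the skeleton that most agent frameworks build on.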

At 12,000+ stars in just a few days, the appetite for a simpler alternative to OpenClaw is clearly massive. The pitch is research-friendliness: the codebase is small enough to actually read and understand, making it ideal for people who want to learn how AI agents work rather than just use one.

nanobot - Ultra-Lightweight Personal AI Assistant

Core agent functionality in ~4,000 lines. Supports Telegram, Discord, WhatsApp, and multiple LLM providers.

🚀 New Releases: Opus 4.6 and GPT 5.3-Codex

In what might be the most direct head-to-head launch day in AI history, Anthropic and OpenAI both dropped major models on the same day. Claude Opus 4.6 and GPT 5.3-Codex arrived within hours of each other, each targeting the high-end coding and reasoning market.

Claude Opus 4.6 is Anthropic’s new flagship. It extends the Opus 4 line with significantly improved long-context reasoning and agentic performance. As the Vending-Bench results above show, it dominates in sustained, multi-step autonomous tasks. The model also scores highest on SWE-bench Verified and has become the go-to for complex coding workflows. The “extended thinking” mode that debuted in Claude 3.7 Sonnet has been refined here - you can watch it reason through multi-file refactors step by step.

GPT 5.3-Codex is OpenAI’s answer - a code-specialized variant of GPT 5.3 that powers the new Codex agent in ChatGPT. It can spin up a sandboxed cloud environment, clone your repo, and work on tasks autonomously in the background. Think of it as a junior developer you can hand tickets to. It’s fast, handles parallel tasks well, and integrates directly with GitHub for PRs. The free tier even gets limited access.

The real story isn’t which model “wins” on benchmarks - it’s that we now have two extremely capable coding agents competing for developer mindshare. Competition is good. Prices are dropping, capabilities are rising, and the tooling ecosystem is expanding rapidly.

⚡ Quick Hits

screenpipe (★16,747) - Turns your computer into a personal AI that knows everything you’ve done. Records screen and audio, stores locally, lets you search with AI. Only uses 10% CPU and 0.5-3GB RAM. Works as an MCP server with Claude Code - ask “what was I working on yesterday?” and it just knows.

rentahuman.ai - This one’s wild: an API and MCP endpoint that lets AI agents rent a human to do IRL tasks. Launched overnight, 130+ signups including the CEO of an AI startup. The logical endpoint of agent autonomy - when your AI can’t do something physical, it just hires someone.

steipete at YC - OpenClaw’s Peter Steinberger spoke at Y Combinator. Key takeaway: “All apps will become APIs or disappear. Your agent, not you, will be the primary consumer of software.” Apps that survive will be games or sensor-heavy. Personal AI agents will quietly take over daily workflows.

easy-dataset (★13,201) - A tool for creating datasets for LLM fine-tuning, RAG, and evaluation. Converts your documents into structured training data. Useful if you’re trying to fine-tune a model on your own content.

Have a great week!