Hi there,

This week felt very “real-world” to me: less vague AI hype, more concrete signs that agent workflows are getting more useful, cheaper, and easier to operationalize.

📃 In this Monday Morning Mashup:

⭐Highlight: GPT-5.2 and an actual physics result

🤖AI: MiniMax M2.5 pushes agent economics hard

🔧Tools: TrustGraph + Rowboat for context and memory

🌐Web: Local speech-to-text for Home Assistant

Have a great week!

⭐Highlight: AI-assisted theoretical physics moved from demo to preprint

One of the most interesting updates this week is OpenAI’s physics preprint reporting a nonzero result for a gluon amplitude configuration that many researchers had generally assumed to vanish. What makes it notable is not just the claim but the workflow: a model-generated conjecture, a scaffolded proof attempt by the model, and verification by human domain experts.

This feels like a good template for near-term AI-in-science: not replacing researchers, but accelerating “pattern finding + formal checking” loops in hard technical domains.

GPT-5.2 derives a new result in theoretical physics

OpenAI’s write-up (with a link to the preprint) covering the result, methodology, and collaborating researchers.

🤖AI: MiniMax M2.5 is a strong reminder that price-performance is the battlefield

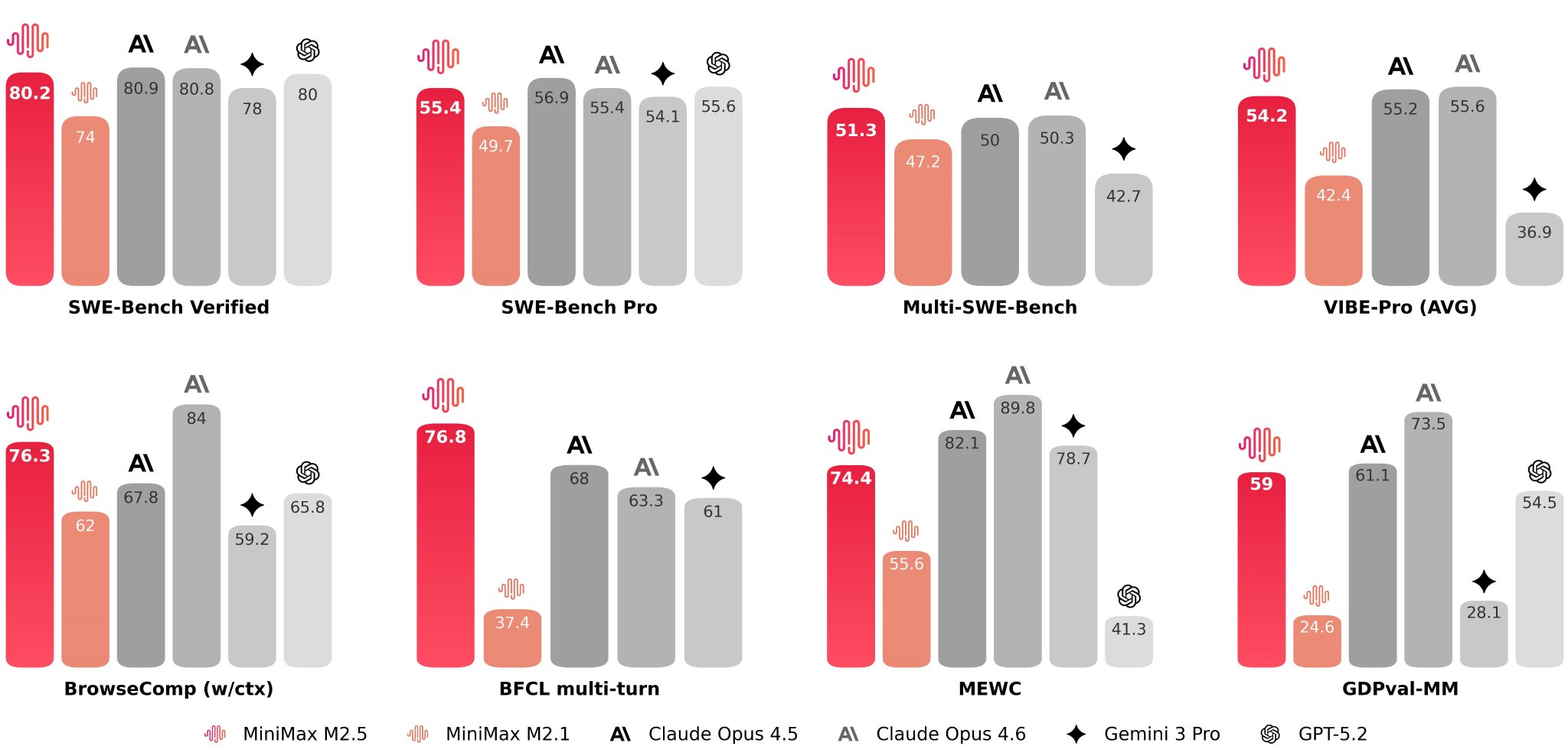

MiniMax’s M2.5 launch is very explicit about the target: real-world agent workloads that run continuously without blowing up cost. Their published benchmarks and pricing claims emphasize execution speed and tool-use performance, not just static leaderboard snapshots.

Even if you discount some vendor-reported metrics, the broader trend is clear: teams are optimizing for full-task throughput and total cost of ownership for long-running agents.

MiniMax M2.5: Built for Real-World Productivity

Launch announcement covering SWE-bench/BrowseComp numbers, RL training setup, and agent-focused pricing.
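To make “total cost of ownership” a bit more concrete, here is a back-of-the-envelope sketch in Python. Every number in it (token counts, per-million-token prices, success rates) is a made-up placeholder, not MiniMax’s or anyone else’s published pricing. The names `AgentRunProfile` and `cost_per_completed_task` are hypothetical, and the sketch assumes failed attempts are simply retried, so the expected number of attempts per success is 1 / success rate.

```python
from dataclasses import dataclass


@dataclass
class AgentRunProfile:
    """Hypothetical per-task usage; the numbers you plug in are illustrative, not vendor figures."""
    input_tokens: int    # tokens sent to the model per task attempt
    output_tokens: int   # tokens generated per task attempt
    success_rate: float  # fraction of attempts that finish the task, in (0, 1]


def cost_per_completed_task(profile: AgentRunProfile,
                            input_price_per_m: float,
                            output_price_per_m: float) -> float:
    """Expected spend to get one successful task, given per-million-token prices.

    Assumes failed attempts are retried independently, so the expected number of
    attempts per success is 1 / success_rate.
    """
    if not 0 < profile.success_rate <= 1:
        raise ValueError("success_rate must be in (0, 1]")
    per_attempt = (profile.input_tokens * input_price_per_m
                   + profile.output_tokens * output_price_per_m) / 1_000_000
    return per_attempt / profile.success_rate


# Made-up comparison: same workload, a cheap-but-flakier model vs a pricier, more reliable one.
workload_a = AgentRunProfile(input_tokens=2_000_000, output_tokens=200_000, success_rate=0.7)
workload_b = AgentRunProfile(input_tokens=2_000_000, output_tokens=200_000, success_rate=0.9)
cost_a = cost_per_completed_task(workload_a, input_price_per_m=0.3, output_price_per_m=1.2)
cost_b = cost_per_completed_task(workload_b, input_price_per_m=3.0, output_price_per_m=15.0)
print(f"model A: {cost_a:.2f} per completed task, model B: {cost_b:.2f}")
```

In this made-up case the cheaper model still wins after paying for its extra retries. The more useful habit is running the same calculation with your own measured token counts and success rates instead of comparing per-token prices in isolation.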

🔧Tools: Better context layers are becoming a product category

Two projects from this week’s feed point in the same direction. TrustGraph focuses on graph-powered context harnessing (GraphRAG plus ontology-driven context). Rowboat focuses on local, inspectable memory as an editable knowledge graph that compounds over time.

My read: we’re moving past “chat with tools” toward systems where context engineering is the core advantage. The winning setups will likely combine retrieval, structured memory, and explicit user-editable state.

TrustGraph: graph-powered context harness for AI agents with GraphRAG APIs and ontology-driven context pipelines.

Rowboat: open-source, local-first AI coworker that turns work artifacts into a persistent, editable knowledge graph.
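To make “retrieval + structured memory + user-editable state” a little more tangible, here is a toy Python sketch. The class and method names are hypothetical, and this is not how TrustGraph or Rowboat are implemented; it only shows the general shape: facts stored as inspectable (subject, relation, object) triples, explicit user edits, and naive graph-neighborhood retrieval to assemble context for a prompt.

```python
class EditableMemoryGraph:
    """Toy knowledge-graph memory of (subject, relation, object) triples a user can inspect and edit.

    Hypothetical illustration only; not the TrustGraph or Rowboat API.
    """

    def __init__(self) -> None:
        self._triples: set[tuple[str, str, str]] = set()

    def add(self, subject: str, relation: str, obj: str) -> None:
        self._triples.add((subject, relation, obj))

    def remove(self, subject: str, relation: str, obj: str) -> None:
        # Explicit, user-driven edit: a wrong memory is deleted, not silently overridden.
        self._triples.discard((subject, relation, obj))

    def neighborhood(self, seeds: set[str], hops: int = 1) -> list[tuple[str, str, str]]:
        """Return triples reachable from the seed entities within `hops` edges (direction ignored)."""
        frontier, seen, found = set(seeds), set(seeds), set()
        for _ in range(hops):
            next_frontier = set()
            for s, r, o in self._triples:
                if s in frontier or o in frontier:
                    found.add((s, r, o))
                    for node in (s, o):
                        if node not in seen:
                            seen.add(node)
                            next_frontier.add(node)
            frontier = next_frontier
        return sorted(found)

    def context_for(self, query: str, hops: int = 1) -> str:
        """Naive retrieval: seed with entities whose names appear in the query, then expand."""
        entities = {s for s, _, _ in self._triples} | {o for _, _, o in self._triples}
        seeds = {e for e in entities if e.lower() in query.lower()}
        return "\n".join(f"{s} --{r}--> {o}" for s, r, o in self.neighborhood(seeds, hops))


memory = EditableMemoryGraph()
memory.add("Project Atlas", "owned_by", "Dana")
memory.add("Dana", "prefers", "weekly summaries")
memory.add("Project Atlas", "due", "Q3")

before = memory.context_for("What's the status of Project Atlas?", hops=2)
memory.remove("Dana", "prefers", "weekly summaries")  # user corrects a stale preference
after = memory.context_for("What's the status of Project Atlas?", hops=2)
print(before, "---", after, sep="\n")
```

Entity matching here is a plain substring check; a real context layer would lean on embeddings, an ontology, or both. The part worth copying is that every fact the agent sees can be listed and deleted by the user.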

🌐Web: Local voice interfaces keep getting better

A practical gem from the Reddit + GitHub set: a Home Assistant add-on integrating streaming ONNX speech-to-text with a privacy-first local pipeline. It’s a good example of where agent UX often wins or loses: input quality, latency, and reliability.

If local voice stacks keep improving on commodity hardware, we’ll likely see many more ambient assistants that are useful without sending every utterance to the cloud.

kroko-ai/kroko-onnx-home-assistant

Home Assistant-focused STT/TTS integration with streaming ONNX speech pipelines and Wyoming support.
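Part of why streaming pipelines feel faster is simple arithmetic: audio reaches the recognizer in small frames instead of as one finished clip. Here is a minimal Python sketch of that chunking step, assuming 16 kHz, 16-bit mono PCM and 20 ms chunks (common speech settings, not necessarily what kroko-onnx uses). The function name `pcm_chunks` is hypothetical.

```python
from typing import Iterator


def pcm_chunks(audio: bytes, sample_rate: int = 16_000, sample_width: int = 2,
               channels: int = 1, chunk_ms: int = 20) -> Iterator[bytes]:
    """Yield fixed-duration slices of raw PCM audio for incremental (streaming) decoding.

    Defaults (16 kHz, 16-bit samples, mono, 20 ms) are common speech settings assumed
    for illustration, not values taken from the kroko-onnx add-on.
    """
    frame_bytes = sample_width * channels  # bytes per sample frame (all channels)
    bytes_per_chunk = sample_rate * frame_bytes * chunk_ms // 1000
    # Keep chunk boundaries aligned to whole sample frames.
    bytes_per_chunk -= bytes_per_chunk % frame_bytes
    if bytes_per_chunk <= 0:
        raise ValueError("chunk_ms too small for this sample rate")
    for start in range(0, len(audio), bytes_per_chunk):
        # The final slice may be shorter than a full chunk.
        yield audio[start:start + bytes_per_chunk]


one_second = bytes(16_000 * 2)  # 1 s of 16-bit mono silence
chunks = list(pcm_chunks(one_second))
print(len(chunks), "chunks of", len(chunks[0]), "bytes")
```

With these settings each chunk is 16,000 × 2 × 0.02 = 640 bytes. A streaming recognizer can start on the first slice while the speaker is still talking, whereas a batch recognizer sees nothing until the whole clip is recorded; that difference is often a large part of why streaming voice UX feels responsive.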

⚡Quick Hits

tambo-ai/tambo - Generative UI SDK for React with component-driven agent interfaces and streaming props.


openai/skills - A growing skills catalog pattern for packaging repeatable agent capabilities.


alehkot/android-emu-agent - Early but interesting CLI/daemon approach for Android emulator control loops.


Have a great week!